Abstract

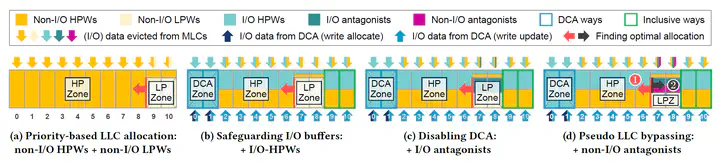

In modern server CPUs, the Last-Level Cache (LLC) serves not only as a victim cache for higher-level private caches but also as a buffer for low-latency DMA transfers between CPU cores and I/O devices through Direct Cache Access (DCA). However, prior work has shown that high-bandwidth network-I/O devices can rapidly flood the LLC with packets, often causing significant contention with co-running workloads. Going a step further, this work explores hidden microarchitectural properties of Intel Xeon CPUs, uncovering two previously unrecognized forms of LLC contention triggered by emerging high-bandwidth I/O devices. Specifically, (C1) DMA-written cache lines in the LLC ways designated for DCA (referred to as DCA ways) are migrated to certain other LLC ways (denoted as inclusive ways) when accessed by CPU cores, where they unexpectedly contend with non-I/O cache lines. In addition, (C2) high-bandwidth storage-I/O devices, which are increasingly common in datacenter servers, benefit little from DCA yet contend with (latency-sensitive) network-I/O devices within the DCA ways. To address both, we present A4, a runtime LLC management framework designed to alleviate (C1) and (C2) among diverse co-running workloads, using a hidden knob and other hardware features implemented in these CPUs. Additionally, we demonstrate that A4 can also alleviate other, previously known forms of network-I/O-driven LLC contention. Overall, A4 improves the performance of latency-sensitive, high-priority workloads by 51% without notably compromising that of low-priority workloads.